TripleA: An Unsupervised Domain Adaptation Framework for Nighttime VRU Detection

Apr 28, 2024

1 min read

Yuankun Wang (王元坤)

Zhenfeng Shao*

Jiaming Wang

Yu Wang

Yulin Ding

Gui Cheng

Abstract

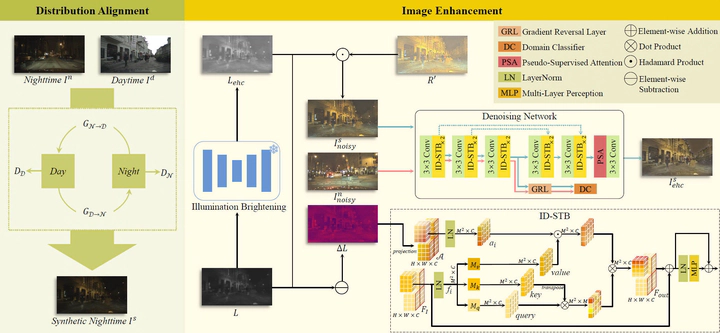

Detecting vulnerable road users (VRUs) at night presents significant challenges. Many methods rely heavily on annotations, yet the low visibility of nighttime images makes labeling difficult. Unsupervised domain adaptation offers a viable way to avoid the need for nighttime annotations. However, existing approaches often focus solely on semantic-level domain shifts and neglect the pixel-level discrepancies caused by inherent degradations in the night domain, which can significantly impair machine vision. This oversight limits the effectiveness of nighttime VRU detection. To this end, we introduce TripleA, an unsupervised domain adaptation framework for nighttime VRU detection. Built on a triple alignment, TripleA first aligns the distributions of the labeled daytime domain with the unlabeled nighttime domain. Then, the degraded image is enhanced in terms of illumination and noise. We present an illumination difference-aware denoising network to address the intractable noise and enable self-supervised learning through a carefully designed exchange-recombination strategy, which is integrated into a novel pseudo-supervised attention to achieve noise distribution alignment. To further strengthen the denoising network in real-world scenarios, we introduce degradation alignment to enforce domain-invariant degradation encoding. Extensive experiments demonstrate that the proposed framework achieves superior performance in nighttime VRU detection without relying on nighttime annotations.

Publication

IEEE Transactions on Intelligent Transportation Systems (DOI: 10.1109/TITS.2025.3548804)
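
The abstract's first step, aligning the distributions of the labeled daytime domain and the unlabeled nighttime domain, can be illustrated with a toy example. The sketch below is not TripleA's alignment module, whose details are not given on this page. It swaps in simple per-dimension moment matching (mean and standard deviation) on made-up feature vectors, and every function name and value in it is hypothetical. It only shows the general idea that a discrepancy between two feature distributions can be measured and reduced without any nighttime labels.

```python
from statistics import fmean, pstdev


def channel_stats(features):
    """Return per-dimension (mean, std) for a batch of feature vectors."""
    columns = list(zip(*features))  # transpose: one tuple per dimension
    return [(fmean(col), pstdev(col)) for col in columns]


def moment_alignment_loss(day_features, night_features):
    """Sum of squared gaps between the means and stds of the two domains."""
    day = channel_stats(day_features)
    night = channel_stats(night_features)
    return sum(
        (md - mn) ** 2 + (sd - sn) ** 2
        for (md, sd), (mn, sn) in zip(day, night)
    )


def match_to_day(day_features, night_features, eps=1e-8):
    """Rescale night features so each dimension takes the day mean and std."""
    day = channel_stats(day_features)
    night = channel_stats(night_features)
    return [
        [
            (x - mn) / (sn + eps) * sd + md
            for x, (md, sd), (mn, sn) in zip(vec, day, night)
        ]
        for vec in night_features
    ]


# Hypothetical 2-D features; real detectors use far higher-dimensional ones.
day = [[0.8, 0.6], [1.0, 0.4], [1.2, 0.5]]
night = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.3]]

before = moment_alignment_loss(day, night)
after = moment_alignment_loss(day, match_to_day(day, night))
print(f"loss before: {before:.4f}, after: {after:.2e}")
```

In a learned framework, a discrepancy of this kind would typically serve as a training signal for the feature extractor rather than as a closed-form rescaling. The rescaling here just keeps the example self-contained.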
